Overview

We present the HANDS24 challenge on hand understanding, built on AssemblyHands, ARCTIC, OakInk2, and UmeTrack. To participate in the challenge, please fill out the Google Form and accept the terms and conditions.

Winners and prizes will be announced and awarded during the workshop.

Please see the General Rules and Participation section and the four tracks below for more details.

Timeline

| Date | Event |
|---|---|
| July 15, 2024 (opened) | Challenge data and website release; registration opens |
| Around August 5, 2024 (see each track page for details) | Challenges start |
| September 8, 2024 | Registration closes |
| Around September 15, 2024 (see each track page for the specific deadline) | Challenge submission deadline |
| September 20, 2024 | Decisions sent to participants |
| September 25, 2024 | Technical report deadline |

General Rules and Participation

All participants must follow the general challenge rules below; additional rules can be found on each official track page.

- To participate in the challenge, please fill out the Google Form and accept the terms and conditions.

- Please DO use your institution's email address. Please DO NOT use a personal email address such as gmail.com, qq.com, or 163.com.

- Each team must register under only one user ID/email ID. The list of team members cannot be changed or rearranged during the competition.

- Each team should use the same email address to create accounts on the evaluation servers, and should use its team name verbatim on those servers.

- Each individual can participate in only one team and should provide an institutional email address at registration.

- The team name should be formal, and we reserve the right to change a team name after discussing it with the team.

- Each team will receive a registration email after registering.

- Teams found to be registered under multiple IDs will be disqualified.

- For any special cases, please email the organizers.

- The primary contact email provided during registration is important.

- We will contact participants via email whenever there is an important update.

- If there are any special circumstances or ambiguities that may lead to disputes, please email the organizers first for explanation or approval. Failing to contact the organizers first may result in disqualification.

- To encourage fair competition, tracks may impose limits such as overall model size or training datasets. Details can be found on each track page.

- Teams may use any publicly available and appropriately licensed data (if allowed by the track) to train their models, in addition to the data provided by the organizers.

- The daily and overall number of submissions may be limited, depending on the track.

- A team's best performance CANNOT be hidden during the competition. Hiding the best performance may result in a warning or even disqualification.

- Any supervised or unsupervised training on the validation/test sets is not allowed in this competition.

- Reproducibility is the responsibility of the winning teams, and we invite all teams to advertise their methods.

- Winning teams should provide their source code to reproduce their results, under strict confidentiality rules, if requested by the organizers or other participants. If the organizing committee determines that the submitted code runs with errors or does not yield results comparable to those on the final leaderboard, and the team is not willing to cooperate, the team will be disqualified and its place will go to the next team on the leaderboard.

- To be eligible for competition prizes and included in the official rankings (to be presented during the workshop and in subsequent publications), participants must provide information about their submission to the organizers. This information may include, but is not limited to, details of the method, use of synthetic and real data, architecture, and training details.

- For each submission, participants must keep the parameters of their method constant across all test data for a given track.

- To be considered a valid candidate in the competition, a method must beat the baseline by a non-trivial margin. A method without significant technical changes is invalid; for example, simply replacing a ResNet18 backbone with a ResNet101 backbone does not count as a valid method. The organizers reserve the right to determine the validity of a method. We will invite all valid teams to advertise their methods via a 2-3 page technical report and/or a poster presentation.

- Winners should provide a 2-3 page technical report, give a winner talk, and present a poster during the workshop.

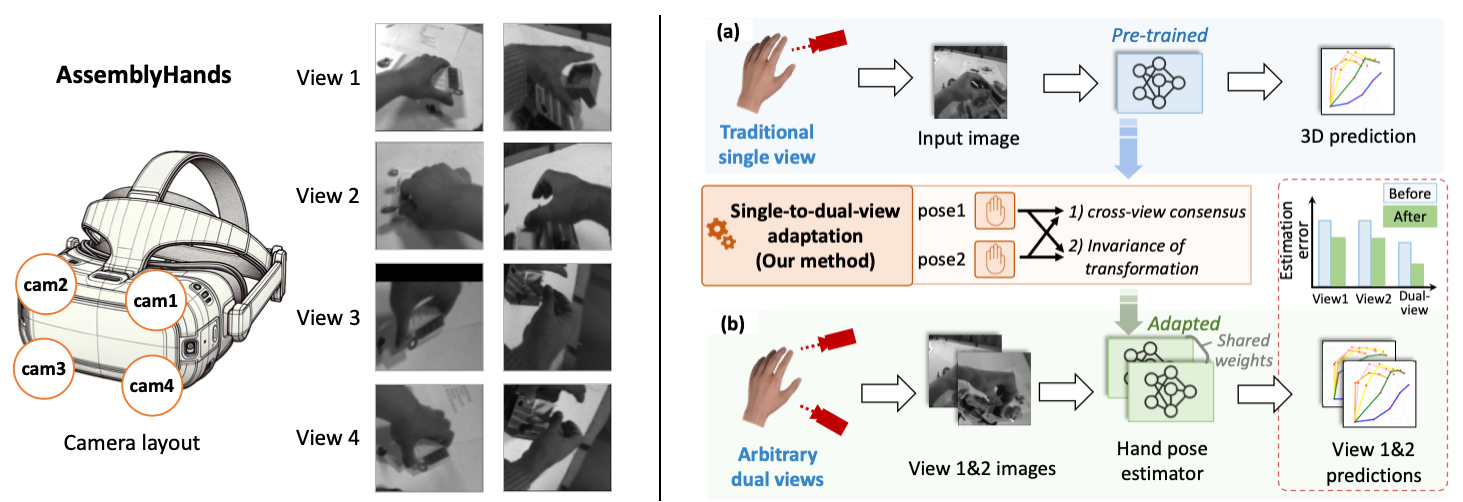

AssemblyHands-S2D (2nd edition)

AssemblyHands is a large-scale benchmark with accurate 3D hand pose annotations that facilitates the study of egocentric activities with challenging hand-object interactions. The first edition of this challenge, held at ICCV'23, featured egocentric 3D hand pose estimation from a single-view image, and participants made significant progress on single-view estimation.

At ECCV’24, we offer an extended AssemblyHands challenge for multi-view settings, dubbed single-view-to-dual-view adaptation of 3D hand pose estimation (S2DHand). Given a pre-trained single-view estimator, participants are asked to develop unsupervised algorithms that adapt it to arbitrarily placed dual-view images, assuming that neither the camera parameters nor the keypoint ground truth of these views are given. Such adaptation would bridge the gap between camera settings more flexibly and allow us to test various camera configurations for better multi-view hand pose estimation. The challenge rules, data, and evaluation are detailed as follows.

Rules

- Only use the provided pre-trained weights for adaptation. To align backbone capacity across submissions, DO NOT increase the backbone model size or use other backbone networks.

- DO NOT use the keypoint ground truth of the AssemblyHands validation and test sets in any way (e.g., for re-training).

- DO NOT use the annotated camera extrinsics from AssemblyHands in any way, including for selecting training samples.

- DO NOT use the validation set for training or fine-tuning.

- DO NOT use any other data or annotations from Assembly101.

- Participants may use only the two-view images during adaptation.

- We reserve the right to determine if a method is valid and eligible for awards.

Metric: We use wrist-relative MPJPE (mean per-joint position error computed after subtracting the wrist position) for each dual-camera pair.
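As a rough illustration of the metric, the sketch below computes wrist-relative MPJPE in plain Python: both the predicted and ground-truth skeletons are translated so that their wrist joint sits at the origin, the Euclidean joint errors are averaged over joints, and the per-frame values are then averaged separately for each dual-camera pair. The wrist index (0), the inclusion of the wrist joint in the average, and the `(pair_id, pred, gt)` sample layout are assumptions for illustration only; the official evaluation code in the submission repository is authoritative.

```python
import math
from typing import Dict, Iterable, Sequence, Tuple

Point3 = Sequence[float]
Skeleton = Sequence[Point3]


def wrist_relative_mpjpe(pred: Skeleton, gt: Skeleton, wrist_idx: int = 0) -> float:
    """Mean Euclidean joint error after moving each skeleton's wrist to the origin.

    wrist_idx=0 is an assumed keypoint ordering; check the official evaluation code.
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same number of joints")
    pw, gw = pred[wrist_idx], gt[wrist_idx]
    total = 0.0
    for p, g in zip(pred, gt):
        # Subtracting each skeleton's own wrist removes global translation error.
        rel_p = [p[k] - pw[k] for k in range(3)]
        rel_g = [g[k] - gw[k] for k in range(3)]
        total += math.dist(rel_p, rel_g)
    return total / len(pred)


def mpjpe_per_camera_pair(samples: Iterable[Tuple[str, Skeleton, Skeleton]]) -> Dict[str, float]:
    """Average the per-frame error separately for each (hypothetical) camera-pair id."""
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for pair_id, pred, gt in samples:
        sums[pair_id] = sums.get(pair_id, 0.0) + wrist_relative_mpjpe(pred, gt)
        counts[pair_id] = counts.get(pair_id, 0) + 1
    return {pair_id: sums[pair_id] / counts[pair_id] for pair_id in sums}
```

Because the wrist is subtracted first, a prediction that is correct up to a global translation scores zero error.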

Submission: Please follow the submission instructions in [ECCV2024-AssemblyHands-S2D], where you can find the test images, metadata, and an example submission file. Please compress your prediction JSON file into a zip archive and upload it to the submission server: [CodaLab]. Our baseline result after adaptation is listed under the name "LRC".
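A minimal sketch of the packaging step, using Python's standard `json` and `zipfile` modules. The file name `pred.json`, the helper name `pack_predictions`, and the layout of the `predictions` dictionary are hypothetical placeholders; the required file name and JSON schema are defined by the example submission file in ECCV2024-AssemblyHands-S2D.

```python
import json
import zipfile
from pathlib import Path


def pack_predictions(predictions: dict, out_dir: str, json_name: str = "pred.json") -> Path:
    """Write predictions to a JSON file and wrap it in a zip archive for upload.

    json_name and the structure of `predictions` are placeholders; follow the
    example submission file in the official repository.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    json_path = out / json_name
    json_path.write_text(json.dumps(predictions))
    zip_path = out / (json_path.stem + ".zip")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        # arcname keeps the JSON at the archive root instead of under out_dir/.
        zf.write(json_path, arcname=json_name)
    return zip_path
```

Placing the JSON at the archive root matters because evaluation servers commonly look for the prediction file there rather than inside a nested folder.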

Update Notes:

(Aug 2): We updated the eval server and submission code.

(July 4): We are constructing the dataset and evaluation server tailored for this challenge. See the S2DHand project for reference on this task.

If you have any questions about the task, rules, or technical issues, please contact us via the HANDS group email. Challenge committee: Ruicong Liu, Takehiko Ohkawa, and Kun He.

Check out the following links to get started

Baseline method (S2DHand): https://github.com/ut-vision/S2DHand_HANDS2024

Submission code: https://github.com/ut-vision/ECCV2024-AssemblyHands-S2D

Leaderboard: https://codalab.lisn.upsaclay.fr/competitions/19885

AssemblyHands dataset: https://assemblyhands.github.io/

ARCTIC

Humans interact with various objects daily, making holistic 3D capture of these interactions crucial for modeling human behavior. Most methods for reconstructing hand-object interactions require pre-scanned 3D object templates, which are impractical in real-world scenarios. Recently, HOLD (Fan et al. CVPR’24) has shown promise in category-agnostic hand-object reconstruction but is limited to single-hand interaction.

Since we naturally interact with both hands, we host the bimanual category-agnostic reconstruction task, where participants must reconstruct both hands and the object in 3D from a video clip without relying on pre-scanned templates. This task is more challenging because bimanual manipulation exhibits severe hand-object occlusion and dynamic hand-object contact, leaving room for future development.

To benchmark this challenge, we adapt HOLD to the two-hand manipulation setting and use 9 videos from the ARCTIC dataset's rigid-object collection, one per object (excluding small objects such as scissors and phones), all sourced from the ARCTIC test set. You will be provided with HOLD baseline skeleton code for the ARCTIC setting, as well as code to produce submission data for our evaluation server.

Important Notes

- Submission deadline for results: September 15, 2024 (11:59PM PST)

- Results will be shared during the HANDS workshop at ECCV 2024 on September 30, 2024

Rules

- Participants cannot use ground-truth intrinsics, extrinsics, hand/object annotations, or object templates from ARCTIC.

- Only use the provided pre-cropped ARCTIC images for the competition.

- The test set ground truth is hidden; submit predictions to our evaluation server for assessment (details coming soon).

- Other hand trackers or object pose estimation methods may be used, provided they were not trained on ARCTIC data.

- Participants may need to submit code for rule violation checks.

- The code must be reproducible by the organizers.

- Reproduced results should match the reported results.

- Participants may be disqualified if results cannot be reproduced by the organizers.

- Methods must show non-trivial novelty; minor changes like hyperparameter tuning do not count.

- Methods must outperform the HOLD baseline by at least 5% to be considered valid; this threshold rules out small improvements that could be caused by numerical error.

- We reserve the right to determine if a method is valid and eligible for awards.
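For a lower-is-better metric such as CD_h, one way to read the 5% rule is as a relative improvement: a method qualifies if its score is at most 95% of the baseline score. The hypothetical helper below encodes that reading; the relative interpretation is an assumption, and the organizers' judgment remains final.

```python
def beats_baseline(method_score: float, baseline_score: float, margin: float = 0.05) -> bool:
    """Check a lower-is-better score against a relative improvement threshold.

    Assumes the 5% rule means method_score <= (1 - margin) * baseline_score.
    """
    if baseline_score <= 0:
        raise ValueError("baseline_score must be positive")
    return method_score <= (1.0 - margin) * baseline_score
```

For example, with a made-up baseline score of 10.0, a method would need a score of 9.5 or lower to clear the threshold.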

Metric: The main metric for this competition is the hand-relative Chamfer distance, CD_h (lower is better), as defined in the HOLD paper. For this two-hand setting, we average the CD_h values of the left and right hands.
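The sketch below illustrates the general idea behind the two-hand metric: the object point clouds are expressed relative to each hand's root joint, a symmetric Chamfer distance is computed between prediction and ground truth, and the left- and right-hand values are averaged. The root-translation alignment, the squared-distance Chamfer variant (summed over both directions), and the brute-force nearest-neighbor search are assumptions for illustration; the exact CD_h definition is given in the HOLD paper and implemented in the official evaluation code.

```python
import math
from typing import Dict, List, Sequence

Point3 = Sequence[float]


def chamfer_distance(a: Sequence[Point3], b: Sequence[Point3]) -> float:
    """Symmetric Chamfer distance: mean squared nearest-neighbor distance a->b plus b->a.

    Brute force O(len(a) * len(b)); fine for a sketch, too slow for dense meshes.
    """
    def one_way(src: Sequence[Point3], dst: Sequence[Point3]) -> float:
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)


def to_hand_frame(points: Sequence[Point3], root: Point3) -> List[List[float]]:
    """Translate points so that the hand root joint is at the origin (assumed alignment)."""
    return [[p[k] - root[k] for k in range(3)] for p in points]


def hand_relative_cd(pred_obj, gt_obj, pred_root: Point3, gt_root: Point3) -> float:
    """Chamfer distance between object point clouds, each expressed in its hand's frame."""
    return chamfer_distance(to_hand_frame(pred_obj, pred_root), to_hand_frame(gt_obj, gt_root))


def two_hand_cd(pred_obj, gt_obj, pred_roots: Dict[str, Point3], gt_roots: Dict[str, Point3]) -> float:
    """Average of the left- and right-hand CD_h values."""
    per_hand = [
        hand_relative_cd(pred_obj, gt_obj, pred_roots[h], gt_roots[h])
        for h in ("left", "right")
    ]
    return sum(per_hand) / len(per_hand)
```

Measuring the object relative to each hand means an object that is reconstructed correctly but placed wrongly with respect to one hand is still penalized for that hand.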

Support: For general tips on processing data and improving HOLD, see here. For other technical questions, raise an issue. If anything about the ARCTIC challenge is unclear (e.g., the rules above), feel free to contact zicong.fan@inf.ethz.ch.

Check out the following links to get started

HOLD project page: https://zc-alexfan.github.io/hold

HOLD code: https://github.com/zc-alexfan/hold

Challenge instructions: https://github.com/zc-alexfan/hold/blob/master/docs/arctic.md

Leaderboard: https://arctic-leaderboard.is.tuebingen.mpg.de/leaderboard

ARCTIC dataset: https://arctic.is.tue.mpg.de/

OakInk2

This challenge focuses on synthesizing physically plausible hand-object interactions that leverage object functionalities. The goal is to use demonstration trajectories from existing datasets to generate hand-object interaction trajectories that are sufficient to complete the specified functions in a designated physical simulation environment. Participants need to synthesize trajectories that can be rolled out in the simulation environment and that use the object's functions to achieve the task goals from the given initial state of the task. We use the OakInk2 dataset (Zhan et al. CVPR'24) as the source of demonstration trajectories in this challenge. OakInk2 contains hand-object interaction demonstrations centered on fulfilling object affordances. In this challenge, we provide demonstrations transferred from the human hand (MANO) to a dexterous embodiment (ShadowHand) for the IsaacGym environment. This challenge provides the following resources:

- A toolkit for setting up the simulation environment;

- Relevant object assets and task initial conditions;

- Raw demonstration trajectories transferred from the corresponding primitive tasks in OakInk2.

Submissions may take one of two forms:

- (Method 1) Code snippets and corresponding instructions for rolling out trajectories in the simulation environment (inference only; no training required), plus model weights if the submitted code requires them to run.

- (Method 2) Full simulator states of all synthesized trajectories.

Submissions can be uploaded in one of the following ways:

- Upload a link to a public code repository containing the executable code and weights;

- Upload an archive containing the executable code and weights;

- Upload an archive containing the full simulator states of all synthesized trajectories.

Check out the following links to get started

OakInk2 project page: https://oakink.net/v2

Toolkit for loading and visualization: https://github.com/oakink/OakInk2-PreviewTool

Challenge instructions: https://github.com/kelvin34501/OakInk2-SimEnv-IsaacGym/wiki

Simulation environment startup: https://github.com/kelvin34501/OakInk2-SimEnv-IsaacGym/

Multiview Egocentric Hand Tracking Challenge (MegoTrack)

In XR applications, accurately estimating hand poses from egocentric cameras is important for enabling social presence and interaction with the environment. Hand pose estimation on XR headsets presents unique challenges that differentiate it from existing problem statements. For hand pose estimation, participants will be provided with calibrated stereo hand crop videos and MANO shape parameters, and are expected to produce MANO pose parameters and wrist transformations. The submitted pose parameters will be combined with the provided MANO shape parameters to compute keypoints and MPJPE. This contrasts with the existing protocol, which allows methods to directly output tracking results as 3D keypoints or full MANO parameters and therefore does not penalize undesirable changes in hand shape. For hand shape estimation, participants will be provided with calibrated stereo hand crop videos, each assumed to capture a single subject, and are expected to produce MANO shape parameters, which will be evaluated using vertex errors in the neutral pose.

This year, we are excited to launch two distinct tracks:

- Hand Shape Estimation: Determine the hand shape using calibrated stereo videos.

- Hand Pose Estimation: Track hand poses in calibrated stereo videos, utilizing pre-calibrated hand shapes.
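To make the difference between the two evaluation protocols concrete, the sketch below compares predictions against ground truth with one ingredient held fixed: pose errors are measured with the provided shape, and shape errors are measured in a fixed neutral pose. `forward_joints` and `forward_verts` are hypothetical stand-ins for a MANO forward pass (they are not part of the Hand Tracking Toolkit API), and wrist transformations are omitted for brevity; the official metrics are computed by the challenge's evaluation code.

```python
import math
from typing import Callable, List, Sequence

Point3 = Sequence[float]
# Hypothetical MANO forward pass: (pose_params, shape_params) -> 3D points.
# A real implementation would come from a MANO layer; none is assumed here.
ForwardFn = Callable[[Sequence[float], Sequence[float]], List[Point3]]


def mean_point_error(a: Sequence[Point3], b: Sequence[Point3]) -> float:
    """Mean Euclidean distance between corresponding points."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def pose_track_mpjpe(forward_joints: ForwardFn, pred_pose, gt_pose, provided_shape) -> float:
    """Pose track: both poses use the SAME provided shape, so only pose errors are measured."""
    pred_joints = forward_joints(pred_pose, provided_shape)
    gt_joints = forward_joints(gt_pose, provided_shape)
    return mean_point_error(pred_joints, gt_joints)


def shape_track_vertex_error(forward_verts: ForwardFn, pred_shape, gt_shape, neutral_pose) -> float:
    """Shape track: both shapes are posed in the same neutral pose, so only shape errors are measured."""
    pred_verts = forward_verts(neutral_pose, pred_shape)
    gt_verts = forward_verts(neutral_pose, gt_shape)
    return mean_point_error(pred_verts, gt_verts)
```

Because both poses go through the same provided shape, a method cannot lower its MPJPE by altering the hand shape, which is the behavior the keypoint-based protocol leaves unpenalized.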

To encourage the development of generalizable methods, we will adopt two datasets featuring different headset configurations: UmeTrack and HOT3D, the latter of which was recently introduced at CVPR.

Check out the following links to get started

Challenge page: https://eval.ai/web/challenges/challenge-page/2333/overview

Hand Tracking Toolkit: https://github.com/facebookresearch/hand_tracking_toolkit

UmeTrack dataset: https://huggingface.co/datasets/facebook/hand_tracking_challenge_umetrack

Contact

hands2024@googlegroups.com